Modern data workflows involve numerous interconnected tasks, dependencies, and schedules. Manually managing these workflows or using basic schedulers like cron jobs quickly becomes inefficient as complexity grows. This is where Apache Airflow comes in – a tool designed to orchestrate workflows efficiently.

In this introduction, we’ll go over the following questions and topics:

- What is Apache Airflow?

- Why Use Apache Airflow?

- When Should You NOT Use Airflow?

- Core concepts of Airflow

- Core components of Airflow

- Architecture diagram of Airflow

What is Apache Airflow?

Apache Airflow is an open-source platform designed to help you develop, schedule, and monitor batch-oriented workflows. With its extensible Python framework, you can build workflows that connect to virtually any technology. A user-friendly web interface makes it easy to manage and monitor the state of your workflows. Whether you’re running a single process on your laptop or deploying a distributed setup to handle massive workflows, Airflow is flexible enough to meet your needs.

At its core, Airflow follows a “workflows as code” philosophy. This means all workflows are defined in Python code, offering several key benefits:

- Python-based: Workflows are written in Python, making them highly dynamic and flexible.

- Scalable: Runs on a single machine or scales up to distributed execution.

- Extensible: Connects with various technologies through built-in operators and hooks, provider packages, and custom plugins.

- Visual Monitoring: Provides a web UI to track workflow execution and debug issues.

- Flexible: Built-in support for the Jinja templating engine allows for powerful workflow parameterization.
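Airflow renders templated task arguments with Jinja before a task runs, so one task definition can refer to per-run values such as `{{ ds }}`, the run's logical date formatted as `YYYY-MM-DD`. To keep the example self-contained it does not use Jinja at all: `render` is a hypothetical stand-in that only understands `{{ name }}` placeholders, and `export.py` is a made-up script name. It only illustrates the idea of parameterizing one definition per run.

```python
import re
from datetime import date

# Hypothetical stand-in for Jinja: replaces {{ name }} with values from a context dict.
# Real Airflow uses the Jinja engine, which also supports filters, macros, and logic.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, context: dict) -> str:
    """Substitute each {{ name }} placeholder; unknown names raise KeyError."""
    return PLACEHOLDER.sub(lambda m: str(context[m.group(1)]), template)


# One task definition, parameterized per run by the run's logical date.
command_template = "python export.py --date {{ ds }} --out /data/{{ ds }}.csv"

for logical_date in [date(2024, 1, 1), date(2024, 1, 2)]:
    context = {"ds": logical_date.isoformat()}  # ds = logical date as YYYY-MM-DD
    print(render(command_template, context))
```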

Whether you need to run a simple task every day or orchestrate thousands of data pipelines, Airflow gives you the control and flexibility to manage workflows efficiently.

Why Use Apache Airflow?

Airflow is designed for batch-oriented workflows, where tasks have a defined start and end and run on a schedule or based on dependencies.

Key Benefits of Airflow

- Version control: Because workflows are Python code, you can track changes and roll back if needed.

- Collaboration: Multiple engineers can work on workflows simultaneously.

- Testing & Debugging: Write tests to validate workflow logic before it runs.

- Rich scheduling capabilities: Automate jobs to run daily, weekly, or on custom intervals.

- Backfilling support: Re-run workflows for past dates, for example after the logic changes.

- Error handling & retries: Automatically retry failed tasks without manual intervention.

- Pipeline monitoring: See pipeline and task statuses at a glance in the web UI.

- Workflow control: Trigger, pause, or rerun workflows from the UI.

- Log inspection: Inspect task logs and troubleshoot errors easily.

- Active community: Airflow was created at Airbnb and is now an Apache Software Foundation project with active contributions from many companies.

- Learning resources: Blogs, conferences, and books.

- Support channels: Slack, mailing lists, and forums.

- Custom components: Build custom operators, sensors, and plugins tailored to your environment.

- Integrations: Connect to cloud providers, databases, APIs, and more.
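Scheduling and backfilling both come down to splitting time into data intervals. With Airflow's classic cron- and timedelta-based schedules, the run that covers one interval (for example, all of 1 January for a daily DAG) starts once that interval has ended, and a backfill creates runs for past intervals. The `due_intervals` function below is a simplified, hypothetical model of that rule using fixed `timedelta` steps; it ignores cron expressions, time zones, and Airflow's catchup settings.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Interval = Tuple[datetime, datetime]


def due_intervals(start: datetime, now: datetime, step: timedelta) -> List[Interval]:
    """Hypothetical helper: every [begin, end) interval from `start` whose end is <= `now`.

    A run can only process an interval's data once the interval is over,
    so an interval that is still in progress is not returned.
    """
    intervals = []
    begin = start
    while begin + step <= now:
        intervals.append((begin, begin + step))
        begin += step
    return intervals


# Backfill-style usage: the daily runs that are due by 4 January, 09:30.
for begin, end in due_intervals(datetime(2024, 1, 1), datetime(2024, 1, 4, 9, 30), timedelta(days=1)):
    print(f"run for {begin:%Y-%m-%d} (data from {begin} to {end})")
```

Note that 4 January itself is not yet due at 09:30, because its interval has not ended.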

If you need a reliable, scalable, and flexible workflow orchestration tool, Airflow is a strong contender.

When Should You NOT Use Airflow?

While Airflow is a powerful tool, it’s not always the best choice. Here’s when you might need to look elsewhere:

- Real-Time Processing: Airflow is batch-oriented, meaning it runs workflows on a schedule or in response to triggers rather than processing events continuously. It is not a real-time streaming platform like Apache Kafka. However, the two complement each other: use Kafka for event ingestion and Airflow to orchestrate downstream batch processing.

- Sub-Minute Task Scheduling: Airflow’s scheduler is designed for tasks running at intervals of minutes or longer. If you need workflows that run every few seconds, consider alternatives like Celery Beat or serverless event-driven architectures.

- Heavy Data Processing: Airflow orchestrates workflows, but it does not process large datasets directly. If you need heavy computation, use Spark, Flink, or Dask for processing and let Airflow manage job execution.

- Click-Based Workflow Management: Airflow follows a “code-first” approach, meaning you define workflows using Python. If you prefer low-code or UI-based workflow tools, options like AWS Step Functions or Google Cloud Workflows might be better.

- Simple, Linear Workflows: If your workflow is just a few steps that run one after another with no complex dependencies, a simple cron job or cloud-native scheduler might be more efficient.

Core concepts of Airflow

Understanding Apache Airflow starts with its core building blocks. These fundamental concepts define how workflows are created, scheduled, and executed within Airflow.

1. DAG (Directed Acyclic Graph)

A DAG is the blueprint of a workflow in Airflow. It is a collection of tasks organized in a way that defines their execution order and dependencies.

- Directed: Tasks flow in a specific direction.

- Acyclic: There are no loops; a task can never depend, directly or indirectly, on itself.

Think of a DAG as a workflow diagram where each step (task) follows a logical sequence without cycles.
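Airflow rejects DAG files that contain cycles when it parses them. To see both properties in plain Python, the sketch below models a DAG as a mapping from each task to the set of tasks it depends on and checks it with the standard-library `graphlib` module (Python 3.9+). The task names are hypothetical, and this is not Airflow's own validation code.

```python
from graphlib import CycleError, TopologicalSorter

# Each task maps to the set of upstream tasks it depends on (hypothetical task names).
pipeline = {
    "extract": set(),
    "clean": {"extract"},
    "validate": {"extract"},
    "load": {"clean", "validate"},
}

# Directed + acyclic: a valid execution order exists.
order = list(TopologicalSorter(pipeline).static_order())
print(order)  # "extract" first, "load" last; "clean"/"validate" may come in either order

# Making "extract" depend on "load" closes a loop, so the graph is no longer a DAG.
cyclic = {**pipeline, "extract": {"load"}}
try:
    list(TopologicalSorter(cyclic).static_order())
except CycleError as err:
    print("not a DAG, cycle:", err.args[1])  # the tasks that form the cycle
```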

2. Operators

An Operator defines what a task does in Airflow. It represents a unit of work, such as running a Python script, executing a SQL query, or launching a Kubernetes pod.

Popular Operators:

- PythonOperator: Runs a Python function.

- BashOperator: Executes shell commands.

- SQLExecuteQueryOperator: Runs SQL queries.

Operators are like templates for tasks: you provide arguments (such as file paths, commands, or parameters), and Airflow handles execution.
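In real Airflow, an operator is a class that subclasses `BaseOperator` and implements an `execute(self, context)` method. The sketch below imitates that pattern in plain Python so it runs without Airflow installed. `ToyOperator`, `ToyPythonOperator`, and `EchoOperator` are hypothetical classes, not Airflow's; the `python_callable` and `op_kwargs` parameter names mirror the ones `PythonOperator` accepts. The class holds the logic once, and each instantiation supplies its own arguments.

```python
from typing import Any, Callable, Dict, Optional


class ToyOperator:
    """Hypothetical stand-in for an operator base class."""

    def __init__(self, task_id: str) -> None:
        self.task_id = task_id

    def execute(self, context: Dict[str, Any]) -> Any:
        raise NotImplementedError("subclasses define the actual work")


class ToyPythonOperator(ToyOperator):
    """Hypothetical: calls a Python function with keyword arguments (the idea behind PythonOperator)."""

    def __init__(self, task_id: str, python_callable: Callable[..., Any],
                 op_kwargs: Optional[Dict[str, Any]] = None) -> None:
        super().__init__(task_id)
        self.python_callable = python_callable
        self.op_kwargs = op_kwargs or {}

    def execute(self, context: Dict[str, Any]) -> Any:
        return self.python_callable(**self.op_kwargs)


class EchoOperator(ToyOperator):
    """Hypothetical: formats a message with values from the run context."""

    def __init__(self, task_id: str, message: str) -> None:
        super().__init__(task_id)
        self.message = message

    def execute(self, context: Dict[str, Any]) -> Any:
        return self.message.format(**context)


def add(a: int, b: int) -> int:
    return a + b


# The operator classes are written once; each task supplies its own arguments.
add_task = ToyPythonOperator("add_numbers", python_callable=add, op_kwargs={"a": 2, "b": 3})
greet_task = EchoOperator("announce", message="Processing data for {ds}")

context = {"ds": "2024-01-01"}
print(add_task.execute(context))    # 5
print(greet_task.execute(context))  # Processing data for 2024-01-01
```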

3. Tasks

A Task is an instance of an Operator within a DAG.

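- Operators define what kind of work to do, while Tasks bind that work to a specific DAG with concrete arguments, dependencies, and settings such as retries.

- Tasks are the actual execution units in Airflow. Each run of a task within a particular DAG run is called a task instance.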

Each task is a specific step in your workflow with assigned logic, dependencies, and execution settings.
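Airflow lets you declare dependencies between tasks with the bitshift operator, as in `extract >> transform >> load`. The sketch below reproduces just that idea in plain Python to show what a task adds on top of an operator: an ID plus links to upstream and downstream tasks. `ToyTask` is a hypothetical class, not Airflow's implementation.

```python
from typing import List


class ToyTask:
    """Hypothetical task: an ID plus upstream/downstream links."""

    def __init__(self, task_id: str) -> None:
        self.task_id = task_id
        self.upstream: List["ToyTask"] = []
        self.downstream: List["ToyTask"] = []

    def __rshift__(self, other: "ToyTask") -> "ToyTask":
        # a >> b means "b runs after a"
        self.downstream.append(other)
        other.upstream.append(self)
        return other  # returning `other` lets a >> b >> c chain left to right

    def __repr__(self) -> str:
        return f"ToyTask({self.task_id!r})"


extract, transform, load = ToyTask("extract"), ToyTask("transform"), ToyTask("load")
extract >> transform >> load

print([t.task_id for t in load.upstream])       # ['transform']
print([t.task_id for t in extract.downstream])  # ['transform']
```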

4. Sensors

A Sensor is a special type of Operator that waits for an external condition to be met before proceeding.

- Unlike normal tasks, sensors wait until they detect a trigger condition, such as a file appearing in a directory, a database entry being updated, or an API returning a specific response.

Sensors are useful for workflows that depend on external events (e.g., waiting for data arrival before processing it).
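In the default `poke` mode, an Airflow sensor calls its `poke()` check repeatedly, sleeping `poke_interval` seconds between attempts, and fails once `timeout` seconds have passed. The function below is a plain-Python sketch of that loop, not Airflow's sensor code; `wait_for` is a hypothetical helper, and the file in the usage example is made up and created by a timer to simulate an upstream system.

```python
import os
import tempfile
import threading
import time
from typing import Callable


def wait_for(condition: Callable[[], bool], poke_interval: float, timeout: float) -> None:
    """Hypothetical sensor loop: check `condition` every `poke_interval` seconds.

    Raises TimeoutError if the condition is still False after `timeout` seconds.
    """
    deadline = time.monotonic() + timeout
    while not condition():
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(poke_interval)


# Usage: wait for a file to appear, as a file sensor would.
target = os.path.join(tempfile.mkdtemp(), "data_ready.csv")
# Simulate an upstream system writing the file after 0.2 seconds.
threading.Timer(0.2, lambda: open(target, "w").close()).start()
wait_for(lambda: os.path.exists(target), poke_interval=0.05, timeout=5)
print("file arrived, downstream tasks can run")
```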

5. Workflow

A Workflow in Airflow refers to the entire process defined by a DAG, including:

- The tasks it contains.

- The dependencies between tasks.

- The scheduling and execution logic.

In Airflow, a DAG is the workflow, defining how tasks execute and interact with each other.

Core components of Airflow

Apache Airflow’s architecture is made up of several components, each playing a crucial role in scheduling, executing, and monitoring workflows. Some components are required for a minimal Airflow installation, while others are optional and help improve performance, scalability, and extensibility.

Required Components

A basic Airflow installation includes the following core components:

1. Scheduler (The Brain of Airflow)

The Scheduler is responsible for:

- Triggering scheduled workflows (DAGs) at the right time.

- Submitting tasks to the Executor for execution.

The Executor, which determines how tasks are run, is a configuration property of the Scheduler rather than a separate component, and it runs inside the Scheduler process. Airflow provides multiple built-in executors, and users can create custom executors if needed.
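The division of labor between the Scheduler and the Executor can be modeled in a few lines. In this hypothetical sketch, the `run_dag` loop plays the scheduler: it decides which tasks are ready (all upstream tasks succeeded) and records their states. A pluggable executor object decides how each task actually runs. `InlineExecutor`, `Executor`, and `run_dag` are illustrative names, not Airflow APIs; the state names `success`, `failed`, and `upstream_failed` do match real Airflow task states.

```python
from typing import Callable, Dict, List, Protocol, Set


class Executor(Protocol):
    """Any object with a run(task_id, fn) method can act as the executor here."""

    def run(self, task_id: str, fn: Callable[[], None]) -> None: ...


class InlineExecutor:
    """Hypothetical executor: runs each task immediately in the current process."""

    def __init__(self) -> None:
        self.ran: List[str] = []  # order in which tasks were handed to this executor

    def run(self, task_id: str, fn: Callable[[], None]) -> None:
        self.ran.append(task_id)
        fn()


def run_dag(upstream: Dict[str, Set[str]],
            callables: Dict[str, Callable[[], None]],
            executor: Executor) -> Dict[str, str]:
    """Hypothetical scheduler loop: submit every task whose upstream tasks all succeeded."""
    state = {task: "none" for task in upstream}
    while True:
        ready = sorted(
            task for task, deps in upstream.items()
            if state[task] == "none" and all(state[d] == "success" for d in deps)
        )
        if not ready:
            break
        for task in ready:
            try:
                executor.run(task, callables[task])  # the executor decides HOW it runs
                state[task] = "success"
            except Exception:
                state[task] = "failed"
    # In an acyclic graph, a task never reached was blocked by an upstream failure.
    return {t: ("upstream_failed" if s == "none" else s) for t, s in state.items()}


def broken_transform() -> None:
    raise RuntimeError("bad data")


upstream = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}
callables = {"extract": lambda: None, "transform": broken_transform, "load": lambda: None}
executor = InlineExecutor()
final_state = run_dag(upstream, callables, executor)
print(final_state)
# {'extract': 'success', 'transform': 'failed', 'load': 'upstream_failed'}
```

Swapping `InlineExecutor` for another object with the same `run` method changes how tasks execute without touching the scheduling logic, which is the same separation Airflow draws between the Scheduler and its configured Executor.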

2. Webserver (The UI for Monitoring & Management)

The Webserver provides a user-friendly web interface to:

- View & manage DAGs and tasks.

- Trigger workflows manually.

- Inspect logs & debug failures.

The web UI is an essential tool for monitoring and troubleshooting workflows.

3. Metadata Database (Airflow’s Memory)

Airflow relies on a metadata database to store:

- Workflow states (e.g., running, success, failed).

- Task execution history.

- DAG configurations.

A properly configured database backend is required for Airflow to function. Components such as the Scheduler, Workers, and Webserver coordinate by reading and writing state in this database, which also keeps the execution history.
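To make the idea of shared state concrete, here is a toy metadata store built with Python's built-in `sqlite3`. The single `task_instance` table is a heavily simplified, hypothetical schema, loosely inspired by the fact that Airflow records one row per task per DAG run. Airflow's real schema has many more tables and columns, and SQLite is only suitable for development; production deployments typically use PostgreSQL or MySQL. The DAG and run IDs are made up.

```python
import sqlite3
from typing import Dict

conn = sqlite3.connect(":memory:")  # in-memory database for the sketch
conn.execute(
    """CREATE TABLE task_instance (
           dag_id TEXT, task_id TEXT, run_id TEXT, state TEXT,
           PRIMARY KEY (dag_id, task_id, run_id))"""
)


def set_state(dag_id: str, task_id: str, run_id: str, state: str) -> None:
    """Hypothetical writer (e.g. scheduler or worker): insert or overwrite one task instance's state."""
    conn.execute(
        "INSERT OR REPLACE INTO task_instance VALUES (?, ?, ?, ?)",
        (dag_id, task_id, run_id, state),
    )
    conn.commit()


def get_states(dag_id: str, run_id: str) -> Dict[str, str]:
    """Hypothetical reader (e.g. the web UI): current state of every task in one run."""
    rows = conn.execute(
        "SELECT task_id, state FROM task_instance WHERE dag_id = ? AND run_id = ? ORDER BY task_id",
        (dag_id, run_id),
    )
    return dict(rows.fetchall())


set_state("sales_etl", "extract", "run_2024_01_01", "running")
set_state("sales_etl", "extract", "run_2024_01_01", "success")
set_state("sales_etl", "load", "run_2024_01_01", "queued")
print(get_states("sales_etl", "run_2024_01_01"))  # {'extract': 'success', 'load': 'queued'}
```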

4. DAG Folder (Where Workflows Are Stored)

Airflow reads all workflow definitions (DAGs) from a designated folder. The Scheduler regularly scans this folder to:

- Identify new DAGs.

- Detect updates to existing DAGs.

- Determine when tasks should be executed.

DAGs are defined as Python scripts and stored in this directory for automatic discovery by Airflow.
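The folder scan can be sketched with the standard library. The hypothetical `scan_dag_folder` function below finds new and modified `.py` files by comparing modification times between scans. It also copies one real Airflow default: with DAG discovery safe mode enabled, Airflow only parses Python files whose contents mention both "airflow" and "dag" (case-insensitive). Actual parsing, importing, and error handling are omitted, and the file names are made up.

```python
import os
import tempfile
from typing import Dict, List, Tuple


def looks_like_dag_file(path: str) -> bool:
    """Mimics Airflow's safe-mode heuristic: the file must mention 'airflow' and 'dag'."""
    with open(path, encoding="utf-8", errors="ignore") as f:
        text = f.read().lower()
    return "airflow" in text and "dag" in text


def scan_dag_folder(folder: str, seen: Dict[str, float]) -> Tuple[List[str], List[str]]:
    """Hypothetical scanner: return (new_files, changed_files); updates `seen` in place."""
    new, changed = [], []
    for root, _dirs, files in os.walk(folder):
        for name in sorted(files):
            path = os.path.join(root, name)
            if not name.endswith(".py") or not looks_like_dag_file(path):
                continue
            mtime = os.path.getmtime(path)
            if path not in seen:
                new.append(path)
            elif mtime != seen[path]:
                changed.append(path)
            seen[path] = mtime
    return new, changed


# Usage: one file that looks like a DAG, one helper module that does not.
folder = tempfile.mkdtemp()
with open(os.path.join(folder, "sales_etl.py"), "w") as f:
    f.write("from airflow import DAG\n")
with open(os.path.join(folder, "helpers.py"), "w") as f:
    f.write("def add(a, b):\n    return a + b\n")

seen: Dict[str, float] = {}
new, changed = scan_dag_folder(folder, seen)
print([os.path.basename(p) for p in new])  # ['sales_etl.py']
```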

Optional Components

While the core components are enough to run Airflow, the following optional components enhance its scalability, extensibility, and efficiency.

1. Workers (For Distributed Task Execution)

Workers execute tasks assigned by the scheduler.

- In basic installations, the worker is part of the scheduler process.

- With the CeleryExecutor, workers run as long-running processes, allowing tasks to be distributed across multiple machines.

- With the KubernetesExecutor, each task runs in its own Kubernetes pod, enabling dynamic scaling.

Workers improve parallel execution and resource management.
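With the CeleryExecutor, the scheduler places tasks on a message broker (such as Redis or RabbitMQ), and long-running worker processes pull tasks off the queue and run them. The sketch below imitates that pattern inside a single Python process, using `queue.Queue` as the broker and threads as workers. It is an analogy only, not how Celery is implemented; the task and worker names are hypothetical.

```python
import queue
import threading
import time

task_queue = queue.Queue()  # stands in for the message broker
results = {}                # task_id -> name of the worker that ran it
results_lock = threading.Lock()


def worker(name: str) -> None:
    """Long-running loop: pull a task, 'run' it, repeat until a None sentinel arrives."""
    while True:
        task_id = task_queue.get()
        if task_id is None:  # shutdown signal
            task_queue.task_done()
            break
        time.sleep(0.01)     # pretend to do some work
        with results_lock:
            results[task_id] = name
        task_queue.task_done()


workers = [threading.Thread(target=worker, args=(f"worker-{i}",)) for i in range(3)]
for w in workers:
    w.start()

# The "scheduler" enqueues ten independent tasks, then one sentinel per worker.
for i in range(10):
    task_queue.put(f"task_{i}")
for _ in workers:
    task_queue.put(None)
for w in workers:
    w.join()

print(len(results), "tasks done by", sorted(set(results.values())))
```

Because the queue is shared, adding workers (or, in a real deployment, machines) increases throughput without changing how tasks are submitted.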

2. Triggerer (For Asynchronous Task Execution)

The Triggerer runs deferred tasks using an asyncio event loop.

- It is only required if you use deferrable operators, which optimize resource usage for tasks that spend a long time waiting on external systems. Without deferred tasks, you don’t need a Triggerer.

Deferrable tasks let Airflow free up worker slots instead of holding them while repeatedly checking whether an external operation has finished.
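When a deferrable operator defers, Airflow releases its worker slot and hands the waiting over to a trigger running in the Triggerer; once the trigger fires, the task is scheduled again to resume. One Triggerer can handle many such waits because waiting inside an asyncio event loop costs almost nothing. In this plain-`asyncio` sketch (not Airflow's trigger API), 100 hypothetical "triggers" each wait 0.1 seconds; because the waits overlap in one event loop, the batch finishes in roughly 0.1 seconds rather than the 10 seconds sequential waiting would take.

```python
import asyncio
import time
from typing import List


async def fake_trigger(task_id: str, wait_seconds: float) -> str:
    """Hypothetical trigger: waits for an external event (simulated with sleep), then fires."""
    await asyncio.sleep(wait_seconds)  # yields control; no thread or worker slot is held
    return task_id


async def run_triggers(n: int, wait_seconds: float) -> List[str]:
    # All triggers wait concurrently in the same event loop.
    return await asyncio.gather(*(fake_trigger(f"task_{i}", wait_seconds) for i in range(n)))


start = time.perf_counter()
fired = asyncio.run(run_triggers(100, 0.1))
elapsed = time.perf_counter() - start
print(f"{len(fired)} triggers fired in {elapsed:.2f} s")
```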

3. DAG Processor (For Efficient DAG Parsing)

The DAG Processor is responsible for parsing and serializing DAG files into the metadata database.

- By default, the scheduler handles DAG processing.

- However, in large-scale deployments, the DAG Processor can run as a separate component to improve scalability by reducing scheduler load and to enhance security by preventing the scheduler from accessing DAG files directly.

When a DAG Processor is used, the scheduler doesn’t need to read DAG files directly.
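The split between parsing and scheduling can be pictured as follows. In this hypothetical sketch, a "DAG processor" executes a DAG file's source and stores a JSON summary in a dictionary that stands in for the metadata database; the "scheduler" then reads only that JSON and never touches the file. Airflow's real serialization format is much richer, and the `dag_id`/`schedule`/`dependencies` variable convention in the fake DAG file is made up for the example.

```python
import json
from typing import Any, Dict

# Stand-in for the metadata database table of serialized DAGs.
serialized_dags: Dict[str, str] = {}

# Hypothetical DAG file contents; real DAG files build DAG and operator objects instead.
DAG_FILE_SOURCE = '''
dag_id = "sales_etl"
schedule = "@daily"
dependencies = {"extract": [], "transform": ["extract"], "load": ["transform"]}
'''


def process_dag_file(source: str) -> None:
    """DAG-processor side: run the file's code, then store a JSON-serialized summary."""
    namespace: Dict[str, Any] = {}
    exec(source, namespace)  # Airflow also executes DAG files when parsing, so they must be trusted code
    summary = {"schedule": namespace["schedule"], "tasks": namespace["dependencies"]}
    serialized_dags[namespace["dag_id"]] = json.dumps(summary)


def scheduler_view(dag_id: str) -> Dict[str, Any]:
    """Scheduler side: read only the serialized form, never the DAG file."""
    return json.loads(serialized_dags[dag_id])


process_dag_file(DAG_FILE_SOURCE)
print(scheduler_view("sales_etl")["tasks"]["load"])  # ['transform']
```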

4. Plugins Folder (Extending Airflow’s Capabilities)

The Plugins Folder allows users to extend Airflow by adding:

- Custom Operators: Define new task types.

- Custom Hooks: Integrate with external systems.

- Custom Sensors & Triggers: Wait on external events and conditions that the built-in sensors and triggers don’t cover.

Plugins are loaded by multiple Airflow components, including the Scheduler, DAG Processor, Triggerer, and Webserver.
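Airflow adds the plugins folder (along with the DAGs and config folders) to `sys.path`, so code placed there can be imported by DAG files and Airflow components. The sketch below reproduces only that import mechanism: it writes a hypothetical custom hook module into a temporary "plugins" folder, puts the folder on `sys.path`, and imports it. Real custom operators and hooks subclass Airflow base classes such as `BaseOperator` and `BaseHook`; those are omitted here so the example runs without Airflow. `InventoryApiHook`, the module name, and the URL are all made up.

```python
import importlib
import os
import sys
import tempfile
import textwrap

plugins_folder = tempfile.mkdtemp()  # stand-in for $AIRFLOW_HOME/plugins

# A hypothetical custom hook module, as it might be dropped into the plugins folder.
plugin_source = textwrap.dedent('''
    class InventoryApiHook:
        """Hypothetical hook: builds URLs for an internal inventory API."""

        def __init__(self, base_url):
            self.base_url = base_url

        def endpoint(self, path):
            return self.base_url.rstrip("/") + "/" + path.lstrip("/")
''')
with open(os.path.join(plugins_folder, "inventory_hooks.py"), "w") as f:
    f.write(plugin_source)

# Roughly what Airflow does for the plugins folder: make it importable.
sys.path.insert(0, plugins_folder)
importlib.invalidate_caches()  # make sure the import system sees the newly written file
inventory_hooks = importlib.import_module("inventory_hooks")

hook = inventory_hooks.InventoryApiHook("https://inventory.example.com/")
print(hook.endpoint("/items"))  # https://inventory.example.com/items
```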

Apache Airflow Architecture

Apache Airflow can be deployed in various architectures, ranging from a simple single-machine setup to a fully distributed environment with multiple components and user roles. The choice of architecture depends on scalability, security, and operational complexity.

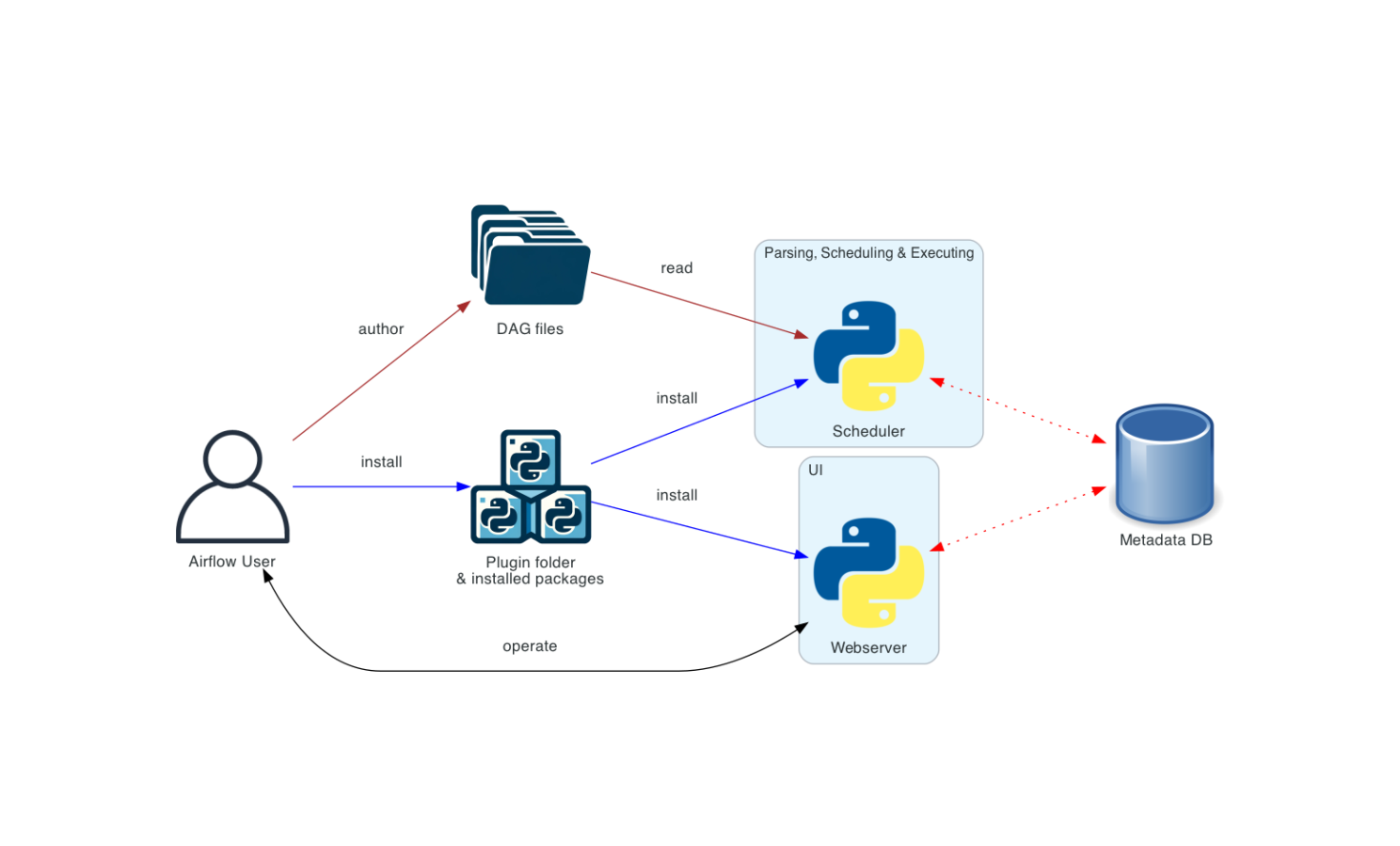

Basic Airflow Deployment (Single Machine Setup)

The simplest way to run Airflow is on a single machine, where all components run within the same environment.

Key Characteristics

- Uses LocalExecutor: The Scheduler and Workers run in the same Python process.

- DAG files stored locally: The Scheduler reads DAGs directly from the local filesystem.

- Webserver runs on the same machine: Provides UI access for monitoring.

- No Triggerer component: Deferred tasks are not supported.

- Single-user operation: The same user handles deployment, configuration, and maintenance.

This setup is best for small-scale projects, development environments, or individual users testing Airflow locally.

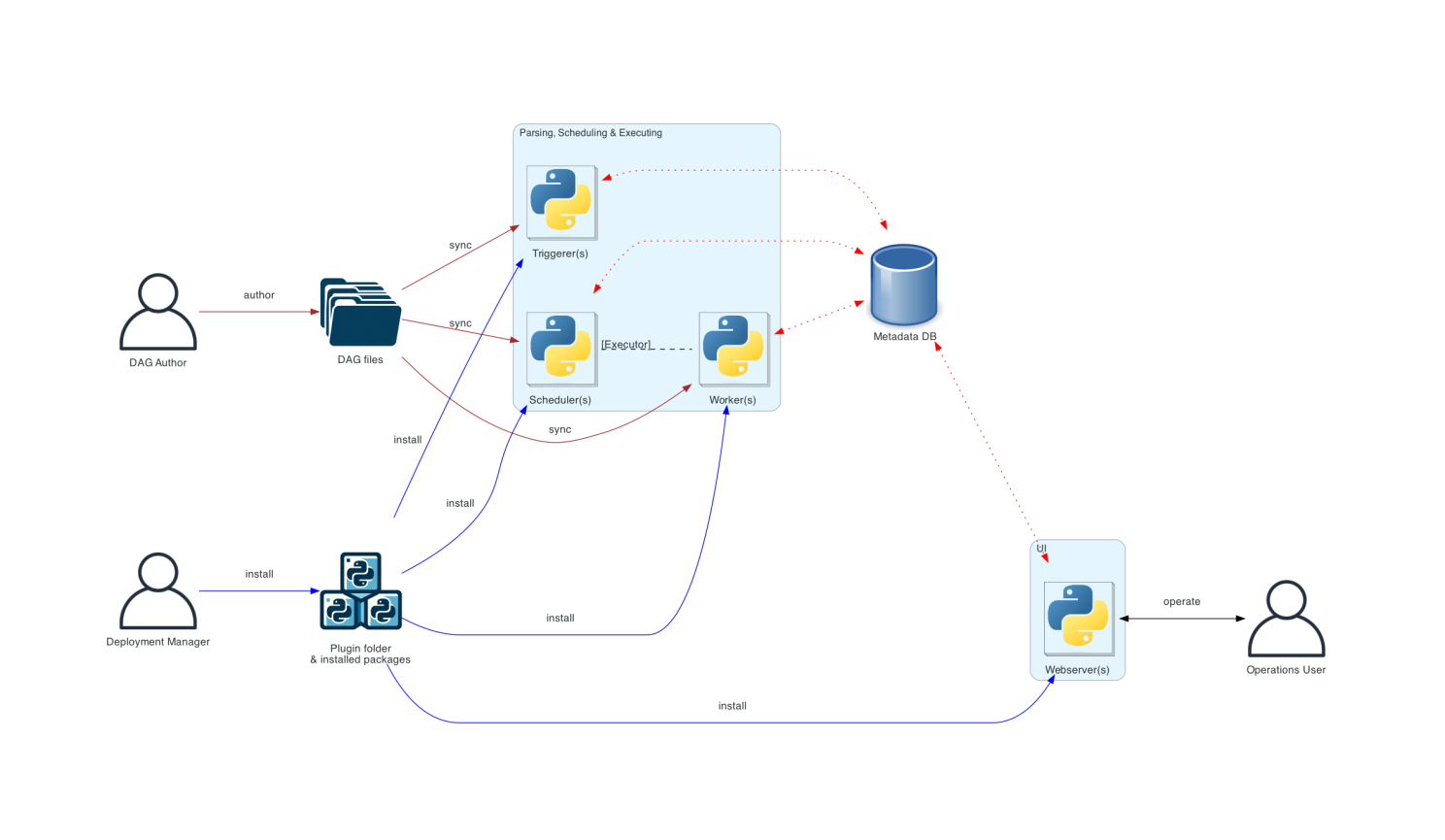

Distributed Airflow Deployment

A distributed Airflow deployment is designed for scalability, security, and multi-user collaboration.

Key Features of a Distributed Setup

- Components run on separate machines: The Scheduler, Workers, Webserver, and Metadata Database are distributed across multiple instances.

- Multiple user roles: The Deployment Manager installs and configures Airflow. The DAG Author writes and submits DAGs but cannot execute them. The Operations User can trigger DAGs but cannot modify them.

- Enhanced security: The Webserver does NOT have direct access to DAG files; instead, the DAG code shown in the UI is read from the Metadata Database. The Webserver also cannot execute code submitted by DAG Authors; it only runs code installed as packages or plugins by the Deployment Manager.

- DAG synchronization across components: DAG files must be kept in sync between the Scheduler, Triggerer, and Workers.

- Kubernetes & cloud support: Tools like Helm charts simplify deploying Airflow on Kubernetes clusters.

This architecture is recommended for production environments where scalability, security, and multi-user collaboration are required.

Wrapping Up

Apache Airflow isn’t just a workflow orchestrator; it’s a game-changer for managing complex data pipelines with ease. By understanding its core concepts, components, and architecture, you can design scalable, efficient, and maintainable workflows tailored to your needs.

Whether you’re just getting started or planning a full-scale deployment, Airflow provides the flexibility to grow with your workflows. So, are you ready to retire your cron jobs and embrace the future of workflow automation?

Read my next blog on Installing Apache Airflow with Docker.